Introduction to Parallelism

Overview

Teaching: 15 min

Exercises: 0 min

Questions

What is parallelisation and parallel programming?

How do MPI and OpenMP differ?

Which parts of a program are amenable to parallelisation?

How do we characterise the classes of problems to which parallelism can be applied?

How should I approach parallelising my program?

Objectives

Describe what the Message Passing Interface (MPI) is

Use the MPI API in a program

Compile and run MPI applications

Use MPI to coordinate the use of multiple processes across CPUs

Submit an MPI program to the Slurm batch scheduler

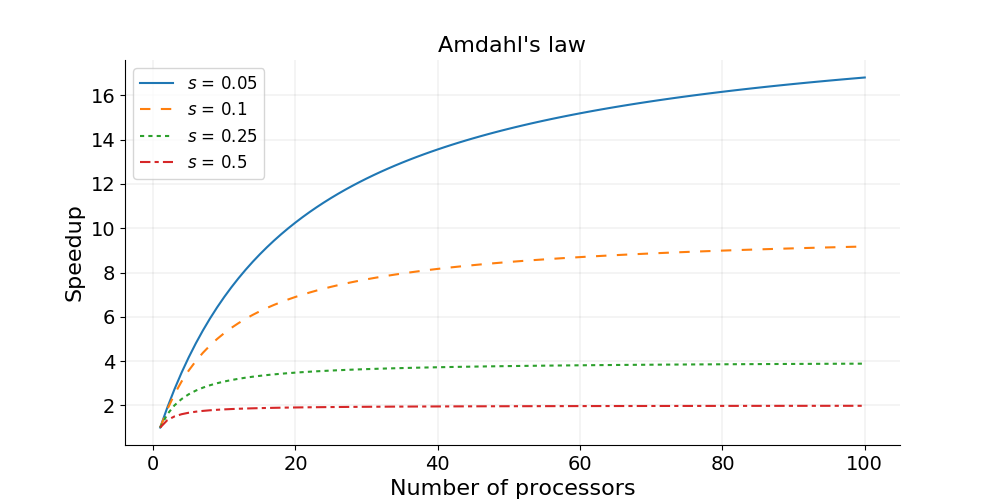

Parallel programming has been important to scientific computing for decades as a way to decrease program run times, making more complex analyses possible (e.g. climate modeling, gene sequencing, pharmaceutical development, aircraft design). During this course you will learn to design parallel algorithms and write parallel programs using the MPI library. MPI stands for Message Passing Interface, and is a low level, minimal and extremely flexible set of commands for communicating between copies of a program. Before we dive into the details of MPI, let’s first familiarize ourselves with key concepts that lay the groundwork for parallel programming.

What is Parallelisation?

At some point in your career, you’ve probably asked the question “How can I make my code run faster?”. The answer depends on your specific situation, but here are a few approaches you might try:

- Optimize the code.

- Move computationally demanding parts of the code from an interpreted language (Python, Ruby, etc.) to a compiled language (C/C++, Fortran, Julia, Rust, etc.).

- Use better theoretical methods that require less computation for the same accuracy.

Each of the above approaches is intended to reduce the total amount of work required by the computer to run your code. A different strategy for speeding up codes is parallelisation, in which you split the computational work among multiple processing units that labor simultaneously. The “processing units” might include central processing units (CPUs), graphics processing units (GPUs), vector processing units (VPUs), or something similar.

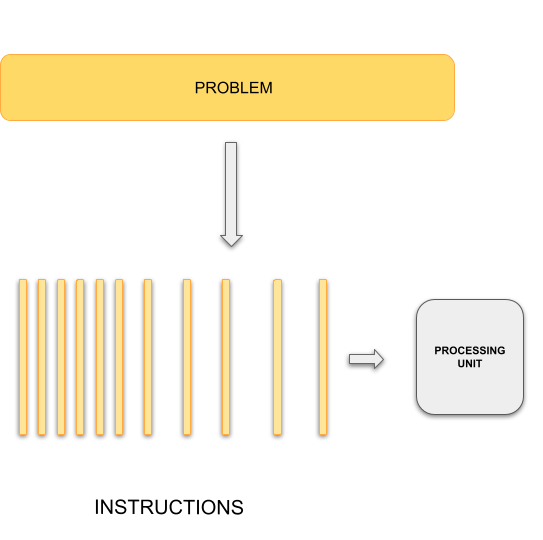

Typical programming assumes that computers execute one operation at a time in the sequence specified by your program code. At any time step, the computer’s CPU core will be working on one particular operation from the sequence. In other words, a problem is broken into a discrete series of instructions that are executed one after another. Therefore only one instruction can execute at any moment in time. We will call this traditional style sequential computing.

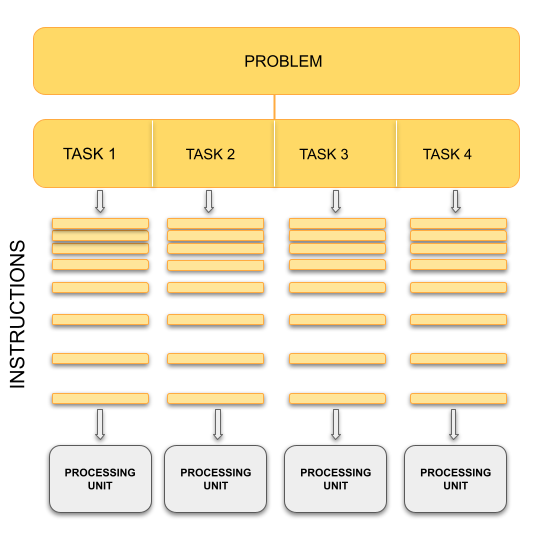

In contrast, with parallel computing we will now be dealing with multiple CPU cores that each are independently and simultaneously working on a series of instructions. This can allow us to do much more at once, and therefore get results more quickly than if only running an equivalent sequential program. The act of changing sequential code to parallel code is called parallelisation.

Sequential Computing | Parallel Computing

Analogy

The basic concept of parallel computing is simple to understand: we divide our job into tasks that can be executed at the same time, so that we finish the job in a fraction of the time it would have taken if the tasks were executed one by one.

Suppose that we want to paint the four walls in a room. This is our problem. We can divide our problem into 4 different tasks: paint each of the walls. In principle, our 4 tasks are independent from each other in the sense that we don’t need to finish one to start another. However, this does not mean that the tasks can be executed simultaneously or in parallel. It all depends on the amount of resources that we have for the tasks.

If there is only one painter, they could work for a while on one wall, then start painting another one, then work a little bit on the third one, and so on. The tasks are being executed concurrently but not in parallel, since only one task is being performed at a time. If we have 2 or more painters for the job, then the tasks can be performed in parallel.

Key idea

In our analogy, the painters represent CPU cores in the computers. The number of CPU cores available determines the maximum number of tasks that can be performed in parallel. The number of concurrent tasks that can be started at the same time, however, is unlimited.

Parallel Programming and Memory: Processes, Threads and Memory Models

Splitting the problem into computational tasks across different processors and running them all at once may conceptually seem like a straightforward solution to achieve the desired speed-up in problem-solving. However, in practice, parallel programming involves more than just task division and introduces various complexities and considerations.

Let’s consider a scenario where you have a single CPU core, associated RAM (primary memory for faster data access), hard disk (secondary memory for slower data access), input devices (keyboard, mouse), and output devices (screen).

Now, imagine having two or more CPU cores. Suddenly, you have several new factors to take into account:

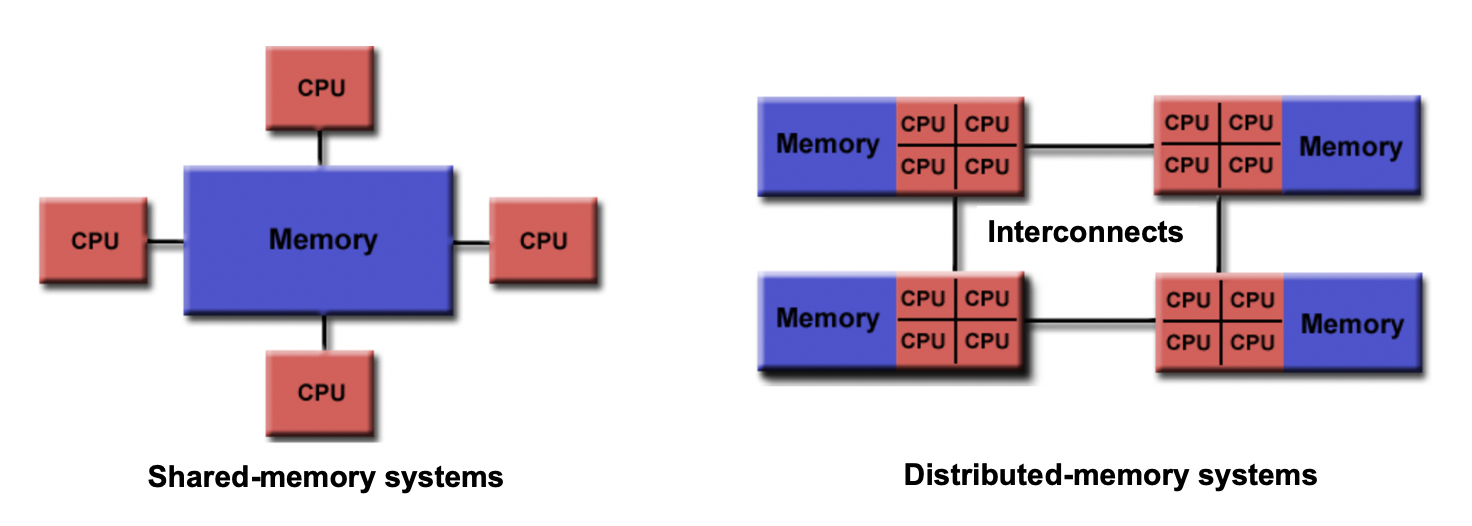

- If there are two cores, there are two possibilities: either these cores share the same RAM (shared memory) or each core has its own dedicated RAM (private memory).

- In the case of shared memory, what happens when two cores try to write to the same location simultaneously? This can lead to a race condition, which requires careful handling by the programmer to avoid conflicts.

- How do we divide and distribute the computational tasks among these cores? Ensuring a balanced workload distribution is essential for optimal performance.

- Communication between cores becomes a crucial consideration. How will the cores exchange data and synchronize their operations? Effective communication mechanisms must be established.

- After completing the tasks, where should the final results be stored? Should they reside in the storage of Core 1, Core 2, or a central storage accessible to both? Additionally, which core is responsible for displaying output on the screen?

These considerations highlight the interplay between parallel programming and memory. To efficiently utilize multiple CPU cores, we need to understand the concepts of processes and threads, as well as different memory models—shared memory and distributed memory. These concepts form the foundation of parallel computing and play a crucial role in achieving optimal parallel execution.

To address the challenges that arise when parallelising programs across multiple cores and achieve efficient use of available resources, parallel programming frameworks like MPI and OpenMP (Open Multi-Processing) come into play. These frameworks provide tools, libraries, and methodologies to handle memory management, workload distribution, communication, and synchronization in parallel environments.

Now, let’s take a brief look at these fundamental concepts and explore the differences between MPI and OpenMP, setting the stage for a deeper understanding of MPI in the upcoming episodes.

Processes

A process refers to an individual running instance of a software program. Each process operates independently and possesses its own set of resources, such as memory space and open files. As a result, data within one process remains isolated and cannot be directly accessed by other processes.

In parallel programming, the objective is to achieve parallel execution by simultaneously running coordinated processes. This naturally introduces the need for communication and data sharing among them. To facilitate this, parallel programming models like MPI come into effect. MPI provides a comprehensive set of libraries, tools, and methodologies that enable processes to exchange messages, coordinate actions, and share data, enabling parallel execution across a cluster or network of machines.
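The isolation between processes can be seen without MPI at all. The following is a minimal sketch, assuming a POSIX system (Linux or macOS): it uses the POSIX calls `fork` and `waitpid`, which are not part of MPI, and the function name `value_seen_by_parent` is made up for this illustration. A child process changes its copy of a variable, and the parent's copy is left untouched.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the parent's copy of `value` after a child process has changed
 * its own copy. Returns -1 if the child process could not be created. */
int value_seen_by_parent(void) {
    int value = 1;

    pid_t pid = fork();            /* create a second process */
    if (pid < 0) {
        return -1;                 /* fork failed */
    }
    if (pid == 0) {
        value = 42;                /* changes only the child's memory */
        _exit(value == 42 ? 0 : 1);
    }

    int status = 0;
    waitpid(pid, &status, 0);      /* wait until the child has finished */
    assert(WIFEXITED(status));     /* the child ended normally */

    return value;                  /* the parent's copy was never modified */
}
```

Calling `value_seen_by_parent()` returns 1, not 42. For the parent to learn the child's value, the two processes would have to exchange it explicitly, which is the job that message passing takes on.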

Threads

A thread is an execution unit that is part of a process. It operates within the context of a process and shares the process’s resources. Unlike processes, multiple threads within a process can access and share the same data, enabling more efficient and faster parallel programming.

Threads are lightweight and can be managed independently by a scheduler. They are units of execution in concurrent programming, allowing multiple threads to execute at the same time, making use of available CPU cores for parallel processing. Threads can improve application performance by utilizing parallelism and allowing tasks to be executed concurrently.

One advantage of using threads is that they can be easier to work with compared to processes when it comes to parallel programming. When incorporating threads, especially with frameworks like OpenMP, modifying a program becomes simpler. This ease of use stems from the fact that threads operate within the same process and can directly access shared data, eliminating the need for complex inter-process communication mechanisms required by MPI. However, it’s important to note that threads within a process are limited to a single computer. While they provide an effective means of utilizing multiple CPU cores on a single machine, they cannot extend beyond the boundaries of that computer.

Analogy

Let’s go back to our painting 4 walls analogy. Our example painters have two arms, and could potentially paint with both arms at the same time. Technically, the work being done by each arm is the work of a single painter. In this example, each painter would be a “process” (an individual instance of a program). The painters’ arms represent a “thread” of a program. Threads are separate points of execution within a single program, and can be executed either synchronously or asynchronously.

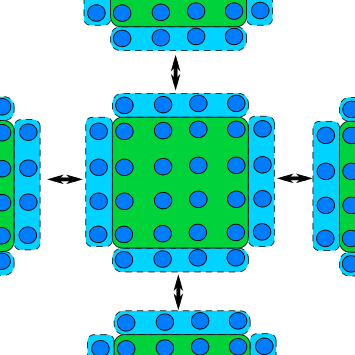

Shared vs Distributed Memory

Shared memory refers to a memory model where multiple processors can directly access and modify the same memory space. Changes made by one processor are immediately visible to all other processors. Shared memory programming models, like OpenMP, simplify parallel programming by providing mechanisms for sharing and synchronizing data.

Distributed memory, on the other hand, involves memory resources that are physically separated across different computers or nodes in a network. Each processor has its own private memory, and explicit communication is required to exchange data between processors. Distributed memory programming models, such as MPI, facilitate communication and synchronization in this memory model.

Differences/Advantages/Disadvantages of Shared and Distributed Memory

- Accessibility: Shared memory allows direct access to the same memory space by all processors, while distributed memory requires explicit communication for data exchange between processors.

- Memory Scope: Shared memory provides a global memory space, enabling easy data sharing and synchronization. In distributed memory, each processor has its own private memory space, requiring explicit communication for data sharing.

- Memory Consistency: Shared memory ensures immediate visibility of changes made by one processor to all other processors. Distributed memory requires explicit communication and synchronization to maintain data consistency across processors.

- Scalability: Shared memory systems are typically limited to a single computer or node, whereas distributed memory systems can scale to larger configurations with multiple computers and nodes.

- Programming Complexity: Shared memory programming models offer simpler constructs and require less explicit communication compared to distributed memory models. Distributed memory programming involves explicit data communication and synchronization, adding complexity to the programming process.
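To make the shared memory side of this comparison concrete, here is a small sketch of threads working on one shared array. It assumes a compiler with OpenMP support (for example GCC with the `-fopenmp` flag); the function name `sum_shared` is hypothetical. If every thread simply added into `total`, their updates could collide, so the `reduction` clause is used to give each thread a private partial sum that is combined at the end.

```c
#include <assert.h>
#include <stddef.h>

/* Sum the n entries of data. All threads read the same shared array, but
 * the reduction clause gives each thread its own partial sum and combines
 * them afterwards, so no two threads write to `total` at the same time. */
long sum_shared(const int *data, size_t n) {
    assert(data != NULL || n == 0);
    long total = 0;

    /* Without OpenMP enabled the pragma is ignored and the loop is serial. */
    #pragma omp parallel for reduction(+:total)
    for (long i = 0; i < (long)n; ++i) {
        total += data[i];
    }
    return total;
}
```

No data is sent or received anywhere in this function: the threads see `data` directly. In a distributed memory version, each process would hold only part of the array and the partial sums would have to be communicated explicitly.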

Analogy

Imagine that all workers have to obtain their paint from a central dispenser located in the middle of the room. If each worker is using a different colour, then they can work asynchronously. However, if they use the same colour, and two of them run out of paint at the same time, then they have to synchronise to use the dispenser: one should wait while the other is being serviced.

Now let’s assume that we have 4 paint dispensers, one for each worker. In this scenario, each worker can complete their task totally on their own. They don’t even have to be in the same room, they could be painting walls of different rooms in the house, in different houses in the city, and different cities in the country. We need, however, a communication system in place. Suppose that worker A, for some reason, needs a colour that is only available in the dispenser of worker B, they must then synchronise: worker A must request the paint of worker B and worker B must respond by sending the required colour.

Key Idea

In our analogy, the paint dispenser represents access to the memory in your computer. Depending on how a program is written, access to data in memory can be synchronous or asynchronous. For the case where each worker has their own dispenser, however, think of the memory distributed across the nodes/computers of a cluster.

MPI vs OpenMP: What is the difference?

| MPI | OpenMP |
|---|---|
| Defines an API, vendors provide an optimized (usually binary) library implementation that is linked using your choice of compiler. | OpenMP is integrated into the compiler (e.g., gcc) and does not offer much flexibility in terms of changing compilers or operating systems unless there is an OpenMP compiler available for the specific platform. |
| Offers support for C, Fortran, and other languages, making it relatively easy to port code by developing a wrapper API interface for a pre-compiled MPI implementation in a different language. | Primarily supports C, C++, and Fortran, with limited options for other programming languages. |
| Suitable for both distributed memory and shared memory (e.g., SMP) systems, allowing for parallelization across multiple nodes. | Designed for shared memory systems and cannot be used for parallelization across multiple computers. |
| Enables parallelism through both processes and threads, providing flexibility for different parallel programming approaches. | Focuses solely on thread-based parallelism, limiting its scope to shared memory environments. |
| Creation of process/thread instances and communication can result in higher costs and overhead. | Offers lower overhead, as inter-process communication is handled through shared memory, reducing the need for expensive process/thread creation. |

Parallel Paradigms

Thinking back to shared vs distributed memory models, how to achieve a parallel computation is divided roughly into two paradigms. Let’s set both of these in context:

- In a shared memory model, a data parallelism paradigm is typically used, as employed by OpenMP: the same operations are performed simultaneously on data that is shared across each parallel operation. Parallelism is achieved by how much of the data a single operation can act on.

- In a distributed memory model, a message passing paradigm is used, as employed by MPI: each CPU (or core) runs an independent program. Parallelism is achieved by each one receiving data it doesn’t have, conducting some operations on this data, and sending data it has.

This division is mainly due to the historical development of parallel architectures: the first paradigm follows from shared memory architectures like SMP (symmetric multiprocessing) and the second from distributed computer architectures. A familiar example of the shared memory architecture is GPU (or multi-core CPU) architecture, and an example of the distributed computing architecture is a cluster of distributed computers. Which architecture is more useful depends on what kind of problems you have. Sometimes, one has to use both!

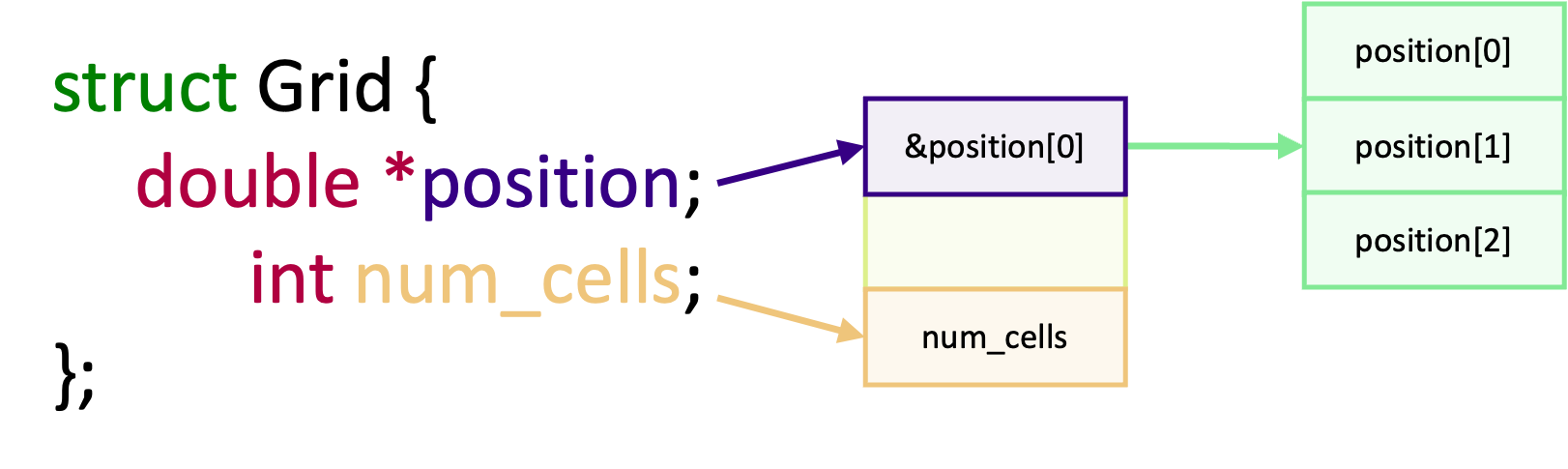

For illustration, consider a simple loop which can be sped up if we have many cores:

    for (i = 0; i < N; ++i) {
        a[i] = b[i] + c[i];
    }

If we have N or more cores, each element of the loop can be computed in just one step (for a factor of \(N\) speed-up). Let’s look into both paradigms in a little more detail, and focus on key characteristics.

1. Data Parallelism Paradigm

One standard method for programming using data parallelism is called “OpenMP” (for “Open MultiProcessing”). To understand what data parallelism means, let’s consider the following bit of OpenMP code which parallelizes the above loop:

    #pragma omp parallel for
    for (i = 0; i < N; ++i) {
        a[i] = b[i] + c[i];
    }

Parallelization is achieved by just one additional line, #pragma omp parallel for, which is handled by the compiler at compile time: the compiler “calculates” the data address offset for each core and lets each one compute on a part of the whole data. This approach provides a convenient abstraction and hides the underlying parallelisation mechanisms.

Here, the key phrase is shared memory, which allows all cores to access all of the address space. We’ll be looking into OpenMP later in this course. In Python, process-based parallelism is supported by the multiprocessing module.
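The OpenMP loop can be wrapped into a complete function as a minimal sketch. The function name `vector_add` and its signature are assumptions made for this illustration. With GCC, OpenMP is switched on with the `-fopenmp` flag; without it the pragma is ignored and the same code runs sequentially, giving the same result.

```c
#include <assert.h>

/* Element-wise a[i] = b[i] + c[i] for i in [0, n). */
void vector_add(double *a, const double *b, const double *c, int n) {
    assert(n >= 0);

    /* Each thread is handed a share of the iterations. The arrays live in
     * shared memory, so every thread can read b and c and write its own
     * elements of a; the loop index i is private to each thread. */
    #pragma omp parallel for
    for (int i = 0; i < n; ++i) {
        a[i] = b[i] + c[i];
    }
}
```

Because no iteration reads a value written by another iteration, the threads never need to coordinate inside the loop.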

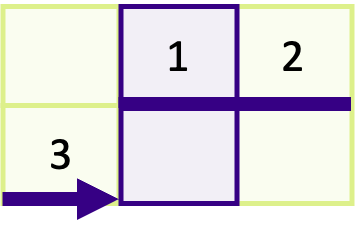

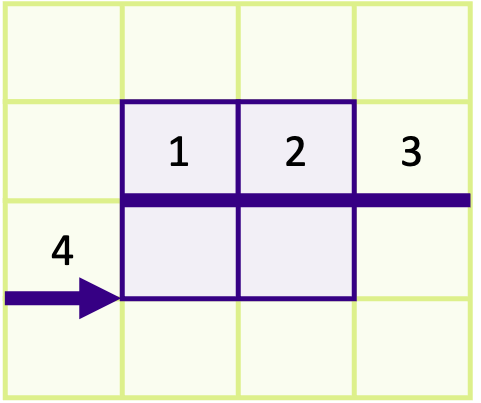

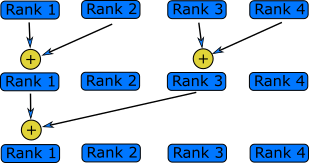

2. Message Passing Paradigm

In the message passing paradigm, each processor runs its own program and works on its own data. To work on the same problem in parallel, they communicate by sending messages to each other. Again using the above example, each core runs the same program over a portion of the data. For example, using this paradigm to parallelise the above loop instead:

    for (i = 0; i < m; ++i) {
        a[i] = b[i] + c[i];
    }

Other than changing the number of loop iterations from N to m, the code is exactly the same. m is the reduced number of iterations each core needs to do (if there are N cores, m is 1 (= N/N)). But the parallelization by message passing is not complete yet. In the message passing paradigm, each core operates independently from the other cores. So each core needs to be sent the correct data to compute, and it then returns the output from that computation. However, we also need a core to coordinate the splitting up of that data, send portions of that data to other cores, and receive the resulting computations from those cores.

Summary

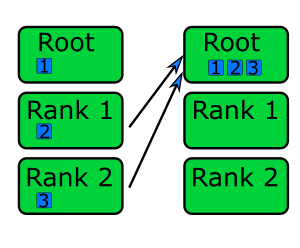

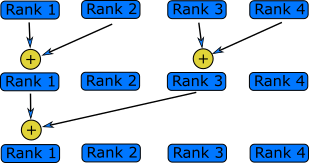

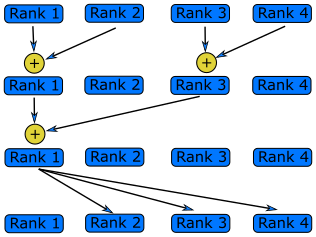

In the end, both data parallelism and message passing logically achieve the following:

Therefore, each rank essentially operates on its own set of data, regardless of paradigm. In some cases, there are advantages to combining data parallelism and message passing methods together, e.g. when there are problems larger than one GPU can handle. In this case, data parallelism is used for the portion of the problem contained within one GPU, and then message passing is used to employ several GPUs (each GPU handles a part of the problem) unless special hardware/software supports multiple GPU usage.
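Whichever paradigm is used, the work has to be divided so that each rank knows which portion of the data is its own. The sketch below shows one common way to do that for a loop over `n` elements. `block_range` is a hypothetical helper written for this illustration; it is not part of MPI or OpenMP, and other splitting rules are equally valid.

```c
#include <assert.h>

/* Work out which elements of an n-element array belong to `rank` when the
 * array is shared out between `n_ranks` ranks. The first (n % n_ranks)
 * ranks receive one extra element, so block sizes differ by at most one. */
void block_range(int n, int n_ranks, int rank, int *start, int *count) {
    assert(n >= 0 && n_ranks > 0);
    assert(rank >= 0 && rank < n_ranks);

    int base = n / n_ranks;      /* elements every rank receives */
    int extra = n % n_ranks;     /* leftover elements to hand out */

    *count = base + (rank < extra ? 1 : 0);
    *start = rank * base + (rank < extra ? rank : extra);
}
```

For `n = 10` and 4 ranks, ranks 0 to 3 receive 3, 3, 2 and 2 elements, starting at indices 0, 3, 6 and 8. Each rank would then run the loop over its own `count` elements only.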

Algorithm Design

Designing a parallel algorithm, and deciding which of the two paradigms above to follow, rests on understanding how the problem can actually be solved in parallel. This requires some thought and practice.

To get used to “thinking in parallel”, we discuss “Embarrassingly Parallel” (EP) problems first and then we consider problems which are not EP problems.

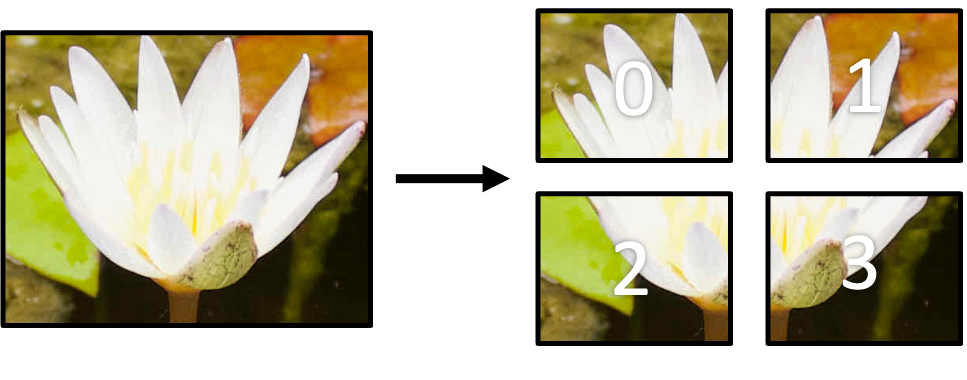

Embarrassingly Parallel Problems

Problems which can be parallelized most easily are EP problems, which occur in many Monte Carlo simulation problems and in many big database search problems. In Monte Carlo simulations, random initial conditions are used in order to sample a real situation. So, a random number is given and the computation follows using this random number. Depending on the random number, some computations may finish quickly and some may take longer. And we need to sample a lot (like a billion times) to get a rough picture of the real situation. The problem becomes running the same code with a different random number over and over again! In big database searches, one needs to dig through all the data to find the wanted data. There may be just one datum or many data which fit the search criterion. Sometimes we don’t need all the data which satisfy the condition; sometimes we do. To speed up the search, the big database is divided into smaller databases, and each smaller database is searched independently by one of many workers!

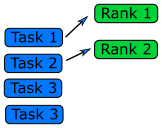

Queue Method

Each worker will get tasks from a predefined queue (a random number in a Monte Carlo problem and smaller databases in a big database search problem). The tasks can be very different and take different amounts of time, but when a worker has completed its task, it will pick the next one from the queue.

In an MPI code, the queue approach requires the ranks to communicate what they are doing to all the other ranks, resulting in some communication overhead (but negligible compared to overall task time).
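The benefit of a queue when tasks take different amounts of time can be seen in a small serial simulation. This is a sketch only: it does not use MPI, the task times are invented for the example, and the names `queue_finish_time`, `static_finish_time` and `MAX_WORKERS` are hypothetical. It compares handing each task to whichever worker is free first against splitting the task list into fixed equal-sized chunks up front.

```c
#include <assert.h>

#define MAX_WORKERS 64

/* Finish time when each task, in queue order, goes to the worker that
 * becomes free first. */
int queue_finish_time(const int *task_time, int n_tasks, int n_workers) {
    assert(n_workers > 0 && n_workers <= MAX_WORKERS);
    int busy_until[MAX_WORKERS] = {0};

    for (int t = 0; t < n_tasks; ++t) {
        int next = 0;                       /* find the earliest free worker */
        for (int w = 1; w < n_workers; ++w) {
            if (busy_until[w] < busy_until[next]) next = w;
        }
        busy_until[next] += task_time[t];
    }

    int finish = 0;                         /* the slowest worker decides */
    for (int w = 0; w < n_workers; ++w) {
        if (busy_until[w] > finish) finish = busy_until[w];
    }
    return finish;
}

/* Finish time when the tasks are split up front into contiguous chunks of
 * equal length, regardless of how long each task takes. */
int static_finish_time(const int *task_time, int n_tasks, int n_workers) {
    assert(n_workers > 0);
    int chunk = (n_tasks + n_workers - 1) / n_workers;
    int finish = 0;

    for (int w = 0; w < n_workers; ++w) {
        int total = 0;
        for (int t = w * chunk; t < (w + 1) * chunk && t < n_tasks; ++t) {
            total += task_time[t];
        }
        if (total > finish) finish = total;
    }
    return finish;
}
```

For task times `{5, 1, 1, 1, 1, 1}` and two workers, the queue finishes at time 5, while the fixed split finishes at time 7 because one worker is left with the long task plus two short ones.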

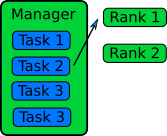

Manager / Worker Method

The manager / worker approach is a more flexible version of the queue method. We hire a manager to distribute tasks to the workers. The manager can run some complicated logic to decide which tasks to give to a worker. The manager can also perform any serial parts of the program like generating random numbers or dividing up the big database. The manager can become one of the workers after finishing managerial work.

In an MPI implementation, the main function will usually contain an if statement that determines whether the rank is the manager or a worker. The manager can execute a completely different code from the workers, or the manager can execute the same partial code as the workers once the managerial part of the code is done. It depends on whether the managerial load takes a lot of time to finish or not. Idling is a waste in parallel computing!

Because every worker rank needs to communicate with the manager, the bandwidth of the manager rank can become a bottleneck if administrative work needs a lot of information (as we can observe in real life). This can happen if the manager needs to send smaller databases (divided from one big database) to the worker ranks. This is a waste of resources and is not a suitable solution for an EP problem. Instead, it’s better to have a parallel file system so that each worker rank can access the necessary part of the big database independently.

General Parallel Problems (Non-EP Problems)

In general, not all the parts of a serial code can be parallelized. So, one needs to identify which parts of a serial code are parallelizable. In science and technology, many numerical computations can be defined on regularly structured data (e.g., partial differential equations in a 3D space using a finite difference method). In this case, one needs to consider how to decompose the domain so that many cores can work in parallel.

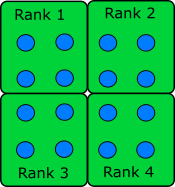

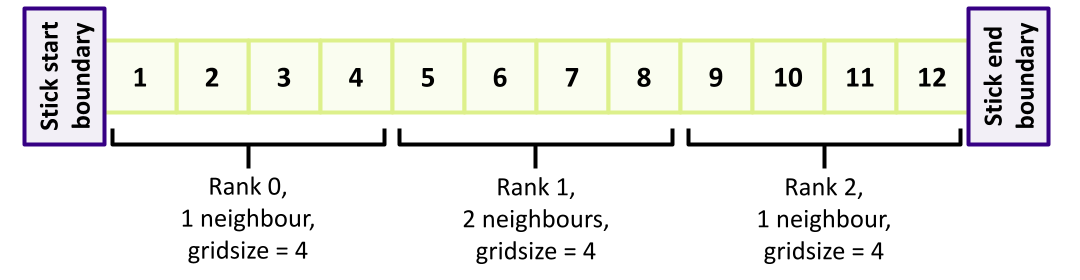

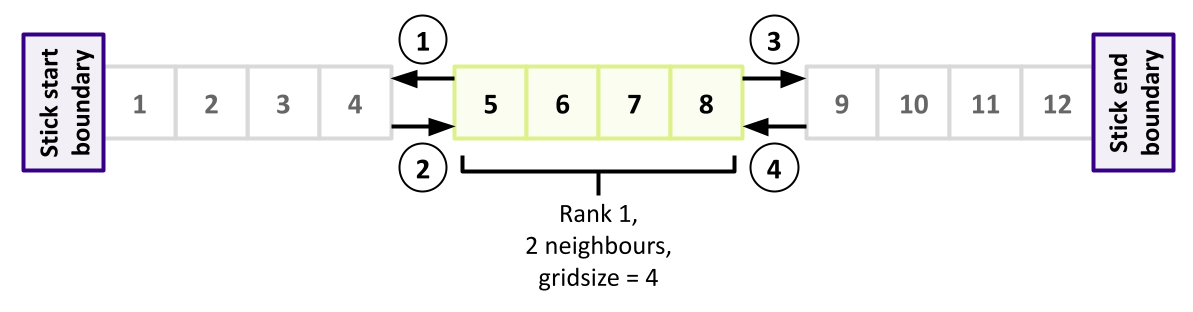

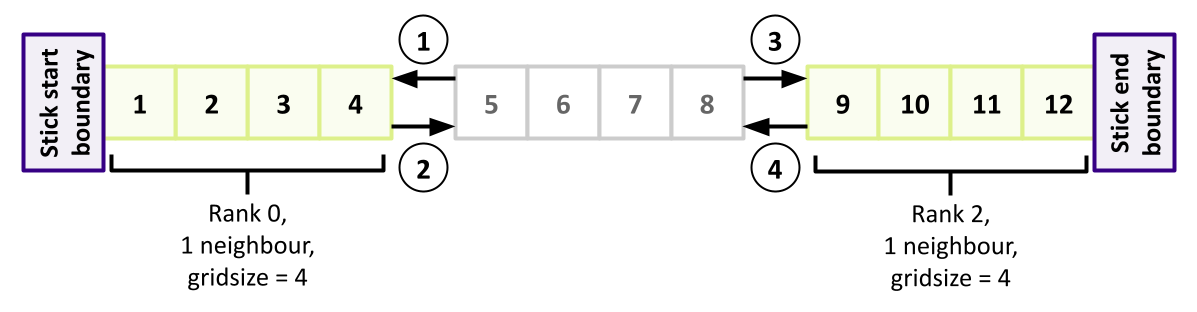

Domain Decomposition

When the data is structured in a regular way, such as when simulating atoms in a crystal, it makes sense to divide the space into domains. Each rank will handle the simulation within its own domain.

Many algorithms involve multiplying very large matrices. These include finite element methods for computational field theories as well as training and applying neural networks. The most common parallel algorithm for matrix multiplication divides the input matrices into smaller submatrices and composes the result from multiplications of the submatrices. If there are four ranks, the matrix is divided into four submatrices.

\[A = \left[ \begin{array}{cc}A_{11} & A_{12} \\ A_{21} & A_{22}\end{array} \right]\]

\[B = \left[ \begin{array}{cc}B_{11} & B_{12} \\ B_{21} & B_{22}\end{array} \right]\]

\[A \cdot B = \left[ \begin{array}{cc}A_{11} \cdot B_{11} + A_{12} \cdot B_{21} & A_{11} \cdot B_{12} + A_{12} \cdot B_{22} \\ A_{21} \cdot B_{11} + A_{22} \cdot B_{21} & A_{21} \cdot B_{12} + A_{22} \cdot B_{22}\end{array} \right]\]

If the number of ranks is higher, each rank needs data from one row and one column to complete its operation.
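The block formula can be checked with a short serial sketch. Everything here is an illustration rather than an MPI implementation: the names `matmul_direct` and `matmul_block` are hypothetical, matrices are stored row by row in flat arrays, and the size `n` is assumed to be even. `matmul_block` computes a single block of the result, which is the piece of work one of the four ranks would own.

```c
#include <assert.h>

/* Reference: plain triple loop over the full n x n matrices. */
void matmul_direct(const double *a, const double *b, double *c, int n) {
    for (int i = 0; i < n; ++i) {
        for (int j = 0; j < n; ++j) {
            c[i * n + j] = 0.0;
            for (int k = 0; k < n; ++k) {
                c[i * n + j] += a[i * n + k] * b[k * n + j];
            }
        }
    }
}

/* Compute only the block of C in block-row bi and block-column bj
 * (each 0 or 1), as the sum of two submatrix products:
 * C_block = A(bi,0) * B(0,bj) + A(bi,1) * B(1,bj). */
void matmul_block(const double *a, const double *b, double *c,
                  int n, int bi, int bj) {
    assert(n > 0 && n % 2 == 0);
    assert(bi >= 0 && bi < 2 && bj >= 0 && bj < 2);
    int h = n / 2;                          /* submatrix size */

    for (int i = bi * h; i < (bi + 1) * h; ++i) {
        for (int j = bj * h; j < (bj + 1) * h; ++j) {
            c[i * n + j] = 0.0;
            for (int bk = 0; bk < 2; ++bk) {    /* the two products */
                for (int k = bk * h; k < (bk + 1) * h; ++k) {
                    c[i * n + j] += a[i * n + k] * b[k * n + j];
                }
            }
        }
    }
}
```

Calling `matmul_block` for all four `(bi, bj)` pairs fills the same result as `matmul_direct`. Each call reads one block-row of `a` and one block-column of `b`, which is the data a rank would need to receive before it can work independently.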

Load Balancing

Even if the data is structured in a regular way and the domain is decomposed such that each core takes charge of a roughly equal share of the sub-domains, the work that each core has to do may not be equal. For example, in weather forecasting, the 3D spatial domain can be decomposed into equal portions. But as the sun moves across the domain, the amount of work in the sunlit portions changes, since more complicated chemistry/physics is happening there. Balancing this type of load is a difficult problem and requires careful thought before designing a parallel algorithm.

Serial and Parallel Regions

Identify the serial and parallel regions in the following algorithm:

    vector_1[0] = 1;
    vector_1[1] = 1;
    for i in 2 ... 1000
        vector_1[i] = vector_1[i-1] + vector_1[i-2];

    for i in 0 ... 1000
        vector_2[i] = i;

    for i in 0 ... 1000
        vector_3[i] = vector_2[i] + vector_1[i];
        print("The sum of the vectors is.", vector_3[i]);

Solution

First Loop: Each iteration depends on the results of the previous two iterations in vector_1. So it is not parallelisable within itself.

Second Loop: Each iteration is independent and can be parallelised.

Third loop: Each iteration is independent within itself. While there are dependencies on vector_2[i] and vector_1[i], these dependencies are local to each iteration. This independence allows for the potential parallelization of the third loop by overlapping its execution with the second loop, assuming the results of the first loop are available or can be made available dynamically.

    serial   | vector_1[0] = 1;
             | vector_1[1] = 1;
             | for i in 2 ... 1000
             |     vector_1[i] = vector_1[i-1] + vector_1[i-2];

    parallel | for i in 0 ... 1000
             |     vector_2[i] = i;

    parallel | for i in 0 ... 1000
             |     vector_3[i] = vector_2[i] + vector_1[i];
             |     print("The sum of the vectors is.", vector_3[i]);

The first and the second loop could also run at the same time.
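As a rough sketch, the same algorithm can be written in C with the parallel regions marked by OpenMP pragmas. Two things here are assumptions rather than part of the exercise: the function name `fill_vectors` is made up, and the length is shortened to `LEN = 50` because the values in `vector_1` grow too quickly to fit in an integer type over 1000 steps. The printing step is left out to keep the example short.

```c
#include <assert.h>

#define LEN 50   /* shortened from the exercise so values fit in a long long */

void fill_vectors(long long v1[LEN], long long v2[LEN], long long v3[LEN]) {
    assert(LEN >= 2);

    /* Serial region: iteration i needs the results of i-1 and i-2. */
    v1[0] = 1;
    v1[1] = 1;
    for (int i = 2; i < LEN; ++i) {
        v1[i] = v1[i - 1] + v1[i - 2];
    }

    /* Parallel region: every iteration is independent of the others. */
    #pragma omp parallel for
    for (int i = 0; i < LEN; ++i) {
        v2[i] = i;
    }

    /* Parallel region: iteration i only reads v1[i] and v2[i], both of
     * which are complete before this loop starts. */
    #pragma omp parallel for
    for (int i = 0; i < LEN; ++i) {
        v3[i] = v2[i] + v1[i];
    }
}
```

This version keeps the three loops in order, which is the simplest correct arrangement. Running the first and second loops at the same time, as the solution suggests, would need additional constructs that are not shown here.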

Key Points

Processes do not share memory and can reside on the same or different computers.

Threads share memory and reside in a process on the same computer.

MPI is an example of multiprocess programming whereas OpenMP is an example of multithreaded programming.

Algorithms can have both parallelisable and non-parallelisable sections.

There are two major parallelisation paradigms: data parallelism and message passing.

MPI implements the Message Passing paradigm, and OpenMP implements data parallelism.

Introduction to the Message Passing Interface

Overview

Teaching: 15 min

Exercises: 0 min

Questions

What is MPI?

How to run a code with MPI?

Objectives

Describe what the Message Passing Interface (MPI) is

Use the MPI API in a program

Compile and run MPI applications

Use MPI to coordinate the use of multiple processes across CPUs

Submit an MPI program to the Slurm batch scheduler

What is MPI?

MPI stands for Message Passing Interface and was developed in the early 1990s as a response to the need for a standardised approach to parallel programming. During this time, parallel computing systems were gaining popularity, featuring powerful machines with multiple processors working in tandem. However, the lack of a common interface for communication and coordination between these processors presented a challenge.

To address this challenge, researchers and computer scientists from leading vendors and organizations, including Intel, IBM, and Argonne National Laboratory, collaborated to develop MPI. Their collective efforts resulted in the release of the first version of the MPI standard, MPI-1, in 1994. This standardisation initiative aimed to provide a unified communication protocol and library for parallel computing.

MPI versions

Since its inception, MPI has undergone several revisions, each introducing new features and improvements:

- MPI-1 (1994): The initial release of the MPI standard provided a common set of functions, datatypes, and communication semantics. It formed the foundation for parallel programming using MPI.

- MPI-2 (1997): This version expanded upon MPI-1 by introducing additional features such as dynamic process management, one-sided communication, parallel I/O, and C++ and Fortran 90 bindings. MPI-2 improved the flexibility and capabilities of MPI programs.

- MPI-3 (2012): MPI-3 brought significant enhancements to the MPI standard, including support for non-blocking collectives, improved multithreading, and performance optimizations. It also addressed limitations from previous versions and introduced fully compliant Fortran 2008 bindings. Moreover, MPI-3 completely removed the deprecated C++ bindings, which were initially marked as deprecated in MPI-2.2.

- MPI-4.0 (2021): On June 9, 2021, the MPI Forum approved MPI-4.0, the latest major release of the MPI standard. MPI-4.0 brings significant updates and new features, including enhanced support for asynchronous progress, improved support for dynamic and adaptive applications, and better integration with external libraries and programming models.

These revisions, along with subsequent updates and errata, have refined the MPI standard, making it more robust, versatile, and efficient.

Today, various MPI implementations are available, each tailored to specific hardware architectures and systems. Popular implementations like MPICH, Intel MPI, IBM Spectrum MPI, MVAPICH and Open MPI offer optimized performance, portability, and flexibility. For instance, MPICH is known for its efficient scalability on HPC systems, while Open MPI prioritizes extensive portability and adaptability, providing robust support for multiple operating systems, programming languages, and hardware platforms.

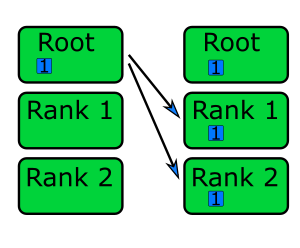

The key concept in MPI is message passing, which involves the explicit exchange of data between processes. Processes can send messages to specific destinations, broadcast messages to all processes, or perform collective operations where all processes participate. This message passing and coordination among parallel processes is facilitated through a set of fundamental functions provided by the MPI standard. Typically, their names start with MPI_ and are followed by a specific function or datatype identifier. Here are some examples:

- MPI_Init: Initializes the MPI execution environment.

- MPI_Finalize: Finalises the MPI execution environment.

- MPI_Comm_rank: Retrieves the rank of the calling process within a communicator.

- MPI_Comm_size: Retrieves the size (number of processes) within a communicator.

- MPI_Send: Sends a message from the calling process to a destination process.

- MPI_Recv: Receives a message into a buffer from a source process.

- MPI_Barrier: Blocks the calling process until all processes in a communicator have reached this point.

It’s important to note that these functions represent only a subset of the functions provided by the MPI standard. There are additional functions and features available for various communication patterns, collective operations, and more. In the following episodes, we will explore these functions in more detail, expanding our understanding of MPI and how it enables efficient message passing and coordination among parallel processes.

In general, an MPI program follows a basic outline that includes the following steps:

- Initialization: The MPI environment is initialized using the MPI_Init() function. This step sets up the necessary communication infrastructure and prepares the program for message passing.

- Communication: MPI provides functions for sending and receiving messages between processes. The MPI_Send function is used to send messages, while the MPI_Recv function is used to receive messages.

- Termination: Once the necessary communication has taken place, the MPI environment is finalised using the MPI_Finalize() function. This ensures the proper termination of the program and releases any allocated resources.

Getting Started with MPI: MPI on HPC

HPC clusters typically have more than one version of MPI available, so you may need to tell the system which one you want to use before you can access it. First check the available MPI implementations/modules on the cluster using the command below:

module avail

This will display a list of available modules, including MPI implementations.

Next, choose the appropriate MPI implementation/module from the list based on your requirements and load it using module load <mpi_module_name>. For example, to load OpenMPI version 4.1.4 (here on COSMA), you can use:

module load openmpi/4.1.4

You may also need to load a compiler depending on your environment, and may see a warning if one is missing. On COSMA, for example, you need to do something like this beforehand:

module load gnu_comp/13.1.0

This sets up the necessary environment variables and paths for the MPI implementation and will give you access to the MPI library. If you are not sure which implementation/version of MPI you should use on a particular cluster, ask a helper or consult your HPC facility’s documentation.

Running a code with MPI

Let’s start with a simple C code that prints “Hello World!” to the console. Save the

following code in a file named hello_world.c

#include <stdio.h>

int main(int argc, char **argv) {

printf("Hello World!\n");

}

Although the code is not an MPI program, we can use the command mpicc to compile it. The mpicc command is essentially a wrapper around the underlying C compiler, such as gcc, providing additional functionality for compiling MPI programs. It simplifies the compilation process by incorporating MPI-specific configurations and automatically linking the necessary MPI libraries and header files. The command below therefore generates an executable file named hello_world.

mpicc -o hello_world hello_world.c

Now let’s try the following command:

mpiexec -n 4 ./hello_world

What if mpiexec doesn’t exist?

If mpiexec is not found, try mpirun instead. This is another common name for the command.

When launching MPI applications and managing parallel processes, we often rely on commands like mpiexec or mpirun. Both commands act as wrappers or launchers for MPI applications, allowing us to initiate and manage the execution of multiple parallel processes across nodes in a cluster. While the behavior and features of mpiexec and mpirun may vary depending on the MPI implementation being used (such as OpenMPI, MPICH, MS MPI, etc.), they are commonly used interchangeably and provide similar functionality.

It is important to note that mpiexec is defined as part of the MPI standard, whereas mpirun is not. While some MPI implementations may use one name or the other, or even provide both as aliases for the same functionality, mpiexec is generally considered the preferred command. Although the MPI standard does not explicitly require MPI implementations to include mpiexec, it does provide guidelines for its implementation. In contrast, the availability and options of mpirun can vary between different MPI implementations. To ensure compatibility and adherence to the MPI standard, it is recommended to primarily use mpiexec as the command for launching MPI applications and managing parallel execution.

The expected output would be as follows:

Hello World!

Hello World!

Hello World!

Hello World!

What did mpiexec do?

Just running a program with mpiexec creates several instances of our application. The number of instances is determined by the -n parameter, which specifies the desired number of processes. These instances are independent and run simultaneously, each executing the same program. Behind the scenes, mpiexec undertakes several critical tasks. It sets up communication between the processes, enabling them to exchange data and synchronize their actions. Additionally, mpiexec takes responsibility for allocating the instances across the available hardware resources, deciding where to place each process for optimal performance. It handles the spawning, killing, and naming of processes, ensuring proper execution and termination.

Using MPI in a Program

As we’ve just learned, running a program with mpiexec or mpirun results in the initiation of multiple instances, e.g. running our hello_world program across 4 processes:

mpirun -n 4 ./hello_world

However, in the example above, the program does not know it was started by mpirun, and each copy just works as if it were the only one. For the copies to work together, each needs to know its role in the computation in order to take proper advantage of parallelisation. This usually also requires knowing the total number of tasks running at the same time.

- The program needs to call the MPI_Init() function.

- MPI_Init() sets up the environment for MPI, and assigns a number (called the rank) to each process.

- At the end, each process should also clean up by calling MPI_Finalize().

int MPI_Init(int *argc, char ***argv);

int MPI_Finalize(void);

Inside main we call these as MPI_Init(&argc, &argv) and MPI_Finalize(). Both functions return an integer that indicates whether the call succeeded or an error occurred. Usually we will return the value of MPI_Finalize() from the main function.

I don’t use command line arguments

If your main function has no arguments because your program doesn’t use any command line arguments, you can pass NULL to MPI_Init() instead.

    #include <mpi.h>

    int main(void) {
        MPI_Init(NULL, NULL);
        return MPI_Finalize();
    }

After MPI is initialized, you can find out the total number of ranks and the specific rank of the copy:

int num_ranks, my_rank;

MPI_Comm_size(MPI_COMM_WORLD, &num_ranks);

MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);

Here, MPI_COMM_WORLD is a communicator, which is a collection of ranks that are able to exchange data between one another. We’ll look into these in a bit more detail in the next episode, but for now we use MPI_COMM_WORLD, the default communicator, which refers to all ranks.

Here’s a more complete example:

#include <stdio.h>

#include <mpi.h>

int main(int argc, char **argv) {

int num_ranks, my_rank;

// First call MPI_Init

MPI_Init(&argc, &argv);

// Get total number of ranks and my rank

MPI_Comm_size(MPI_COMM_WORLD, &num_ranks);

MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);

printf("My rank number is %d out of %d\n", my_rank, num_ranks);

// Call finalize at the end

return MPI_Finalize();

}

Compile and Run

Compile the above C code with mpicc, then run the code with mpirun. You may find the output for each rank is returned out of order. Why is this?

Solution

Assuming the code was saved as mpi_rank.c:

mpicc mpi_rank.c -o mpi_rank

mpirun -n 4 ./mpi_rank

You should see something like (although the ordering may be different):

My rank number is 1 out of 4

My rank number is 2 out of 4

My rank number is 3 out of 4

My rank number is 0 out of 4

The results are not returned in order because the order in which the processes run is arbitrary. As we’ll see later, there are ways to synchronise processes to obtain a desired ordering!

Using MPI to Split Work Across Processes

We’ve seen how to use MPI within a program to determine the total number of ranks, as well as our own ranks. But how should we approach using MPI to split up some work between ranks so the work can be done in parallel? Let’s consider one simple way of doing this.

Let’s assume we wish to count the number of prime numbers between 1 and 100,000, and want to split this workload evenly between the CPUs we have available. We already know the total number of ranks num_ranks, our own rank my_rank, and the total workload (i.e. 100,000 iterations), and using this information we can split the workload evenly across our ranks. Given 4 CPUs, the work would therefore be divided into 25,000 iterations per rank, as:

Rank 0: 1 - 25,000

Rank 1: 25,001 - 50,000

Rank 2: 50,001 - 75,000

Rank 3: 75,001 - 100,000

Note we start with rank 0, since MPI ranks start at zero.

We can work out the iterations to undertake for a given rank number as follows:

- Work out the number of iterations per rank by dividing the total number of iterations we want to calculate by num_ranks

- Determine the start of the work iterations for this rank by multiplying our rank number by the iterations per rank, and adding 1

- Determine the end of the work iterations for this rank by working out the point just before the hypothetical start of the next rank

We could write this in C as:

// calculate how many iterations each rank will deal with

int iterations_per_rank = NUM_ITERATIONS / num_ranks;

int rank_start = (my_rank * iterations_per_rank) + 1;

int rank_end = (my_rank + 1) * iterations_per_rank;

We also need to cater for the case where work cannot be distributed evenly across ranks because the total work isn’t directly divisible by the number of CPUs. In this case, we adjust the last rank’s end of iterations to be the total number of iterations. This ensures the entire desired workload is calculated:

// catch cases where the work can't be split evenly

if (rank_end > NUM_ITERATIONS || (my_rank == (num_ranks-1) && rank_end < NUM_ITERATIONS)) {

rank_end = NUM_ITERATIONS;

}

Now we have this information, within a single rank we can perform the calculation for counting primes between our assigned subset of the problem, and output the result, e.g.:

// each rank is dealing with a subset of the problem between rank_start and rank_end

int prime_count = 0;

for (int n = rank_start; n <= rank_end; ++n) {

bool is_prime = true; // remember to include <stdbool.h>

// 0 and 1 are not prime numbers

if (n == 0 || n == 1) {

is_prime = false;

}

// if any i between 2 and n / 2 divides n exactly, then n is not prime

for (int i = 2; i <= n / 2; ++i) {

if (n % i == 0) {

is_prime = false;

break;

}

}

if (is_prime) {

prime_count++;

}

}

printf("Rank %d - count of primes between %d-%d: %d\n", my_rank, rank_start, rank_end, prime_count);

To try this out, copy the full example code, compile and then run it:

mpicc -o count_primes count_primes.c

mpiexec -n 2 ./count_primes

Of course, this solution only goes so far. We can add the resulting counts from each rank together to get our final number of primes between 1 and 100,000, but what would be useful would be to have our code somehow retrieve the results from each rank and add them together, and output that overall result. More generally, ranks may need results from other ranks to complete their own computations. For this we would need ways for ranks to communicate - the primary benefit of MPI - which we’ll look at in subsequent episodes.

What About Python?

In MPI for Python (mpi4py), the initialization and finalization of MPI are handled by the library, and the user can perform MPI calls after importing it with from mpi4py import MPI.

Submitting our Program to a Batch Scheduler

In practice, such parallel codes may well be executed on a local machine, particularly during development. However, much greater computational power is often desired to reduce runtimes to tractable levels, by running such codes on HPC infrastructures such as DiRAC. These infrastructures make use of batch schedulers, such as Slurm, to manage access to distributed computational resources. So given our prime-counting example, how would we run this on an HPC batch scheduler such as Slurm?

Let’s create a Slurm submission script called count_primes.sh. Open an editor (e.g. Nano) and type (or copy/paste) the following contents:

#!/usr/bin/env bash

#SBATCH --account=yourProjectCode

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=4

#SBATCH --time=00:01:00

#SBATCH --job-name=count_primes

module load gnu_comp/13.1.0

module load openmpi/4.1.4

mpiexec -n 4 ./count_primes

We can now submit the job using the sbatch command and monitor its status with squeue:

sbatch count_primes.sh

squeue -u yourUsername

Note the job id and check your job in the queue. Take a look at the output file when the job completes. You should see something like:

====

Starting job 6378814 at Mon 17 Jul 11:05:12 BST 2023 for user yourUsername.

Running on nodes: m7013

====

Rank 0 - count of primes between 1-25000: 2762

Rank 1 - count of primes between 25001-50000: 2371

Rank 2 - count of primes between 50001-75000: 2260

Rank 3 - count of primes between 75001-100000: 2199

Key Points

The MPI standards define the syntax and semantics of a library of routines used for message passing.

By default, the order in which operations are run between parallel MPI processes is arbitrary.

Communicating Data in MPI

Overview

Teaching: 15 min

Exercises: 5 min

Questions

How do I exchange data between MPI ranks?

Objectives

Differentiate between point-to-point and collective communication

List basic MPI data types

Describe the role of a communicator

List the communication modes for sending and receiving data

Differentiate between blocking and non-blocking communication

Describe and list possible causes of deadlock

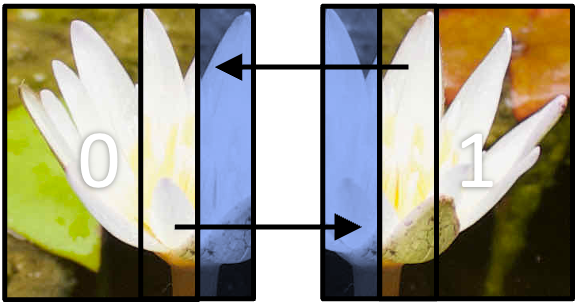

In previous episodes we’ve seen that when we run an MPI application, multiple independent processes are created which do their own work, on their own data, in their own private memory space. At some point in our program, one rank will probably need to know about the data another rank has, such as when combining a problem back together which was split across ranks. Since each rank’s data is private to itself, we can’t just access another rank’s memory and get what we need from there. We have to instead explicitly communicate data between ranks. Sending and receiving data between ranks form some of the most basic building blocks in any MPI application, and the success of your parallelisation often relies on how you communicate data.

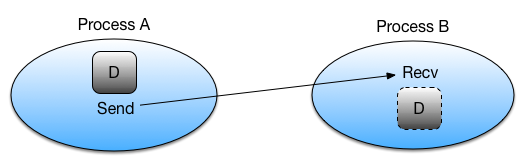

Communicating data using messages

MPI is a framework for passing data and other messages between independently running processes. If we want to share or access data from one rank to another, we use the MPI API to transfer data in a “message.” A message is a data structure which contains the data we want to send, and is usually expressed as a collection of data elements of a particular data type.

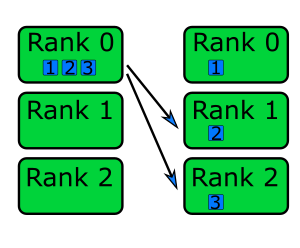

Sending and receiving data can happen in two patterns. We either want to send data from one specific rank to another, known as point-to-point communication, or to/from multiple ranks all at once to a single or multiple targets, known as collective communication. Whatever we do, we always have to explicitly “send” something and explicitly “receive” something. Data communication can’t happen by itself. A rank can’t just get data from another rank, and ranks don’t automatically send and receive data. If we don’t program in data communication, data isn’t exchanged. Unfortunately, none of this communication happens for free, either. With every message sent, there is an overhead which impacts the performance of your program. Often we won’t notice this overhead, as it is usually quite small. But if we communicate large amounts of data, or small amounts too often, those (small) overheads add up into a noticeable performance hit.

To get an idea of how communication typically happens, imagine we have two ranks: rank A and rank B. If rank A wants to send data to rank B (i.e., point-to-point), it must first call the appropriate MPI send function which typically (but not always, as we’ll find out later) puts that data into an internal buffer, known as the send buffer or the envelope. Once the data is in the buffer, MPI figures out how to route the message to rank B (usually over a network) and then sends it to B. To receive the data, rank B must call a data receiving function which will listen for messages being sent to it. In some cases, rank B will then send an acknowledgement to say that the transfer has finished, similar to read receipts in e-mails and instant messages.

Check your understanding

Consider a simulation where each rank calculates the physical properties for a subset of cells on a very large grid of points. One step of the calculation needs to know the average temperature across the entire grid of points. How would you approach calculating the average temperature?

Solution

There are multiple ways to approach this situation, but the most efficient approach would be to use collective operations to send the average temperature to a main rank which performs the final calculation. You can, of course, also use a point-to-point pattern, but it would be less efficient, especially with a large number of ranks.

If the simulation wasn’t done in parallel, or was instead using shared-memory parallelism, such as OpenMP, we wouldn’t need to do any communication to get the data required to calculate the average.

MPI data types

When we send a message, MPI needs to know the size of the data being transferred. The size is not the number of bytes of data being sent, as you may expect, but is instead the number of elements of a specific data type being sent. When we send a message, we have to tell MPI how many elements of “something” we are sending and what type of data it is. If we don’t do this correctly, we’ll either end up telling MPI to send only some of the data or try to send more data than we want! For example, if we were sending an array and we specify too few elements, then only a subset of the array will be sent or received. But if we specify too many elements, then we are likely to end up with either a segmentation fault or undefined behaviour! And the same can happen if we don’t specify the correct data type.

There are two types of data type in MPI: “basic” data types and derived data types. The basic data types are in essence

the same data types we would use in C such as int, float, char and so on. However, MPI doesn’t use the same

primitive C types in its API, using instead a set of constants which internally represent the data types. These data

types are in the table below:

| MPI basic data type | C equivalent |
|---|---|
| MPI_SHORT | short int |
| MPI_INT | int |
| MPI_LONG | long int |
| MPI_LONG_LONG | long long int |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_UNSIGNED_SHORT | unsigned short int |
| MPI_UNSIGNED | unsigned int |
| MPI_UNSIGNED_LONG | unsigned long int |
| MPI_UNSIGNED_LONG_LONG | unsigned long long int |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |
| MPI_CHAR | char |
| MPI_BYTE | no direct equivalent (a raw 8-bit byte) |

Remember, these constants aren’t the same as the primitive types in C, so we can’t use them to create variables, e.g.,

MPI_INT my_int = 1;

is not valid code because, under the hood, these constants are actually special data structures used by MPI. Therefore we can only use them as arguments in MPI functions.

Don’t forget to update your types

At some point during development, you might change an int to a long or a float to a double, or something to something else. Once you’ve gone through your codebase and updated the types for, e.g., variable declarations and function arguments, you must do the same for MPI functions. If you don’t, you’ll end up running into communication errors. It could be helpful to define compile-time constants for the data types and use those instead. If you ever do need to change the type, you would only have to do it in one place, e.g.:

    // define constants for your data types
    #define MPI_INT_TYPE MPI_INT
    #define INT_TYPE int
    // use them as you would normally
    INT_TYPE my_int = 1;

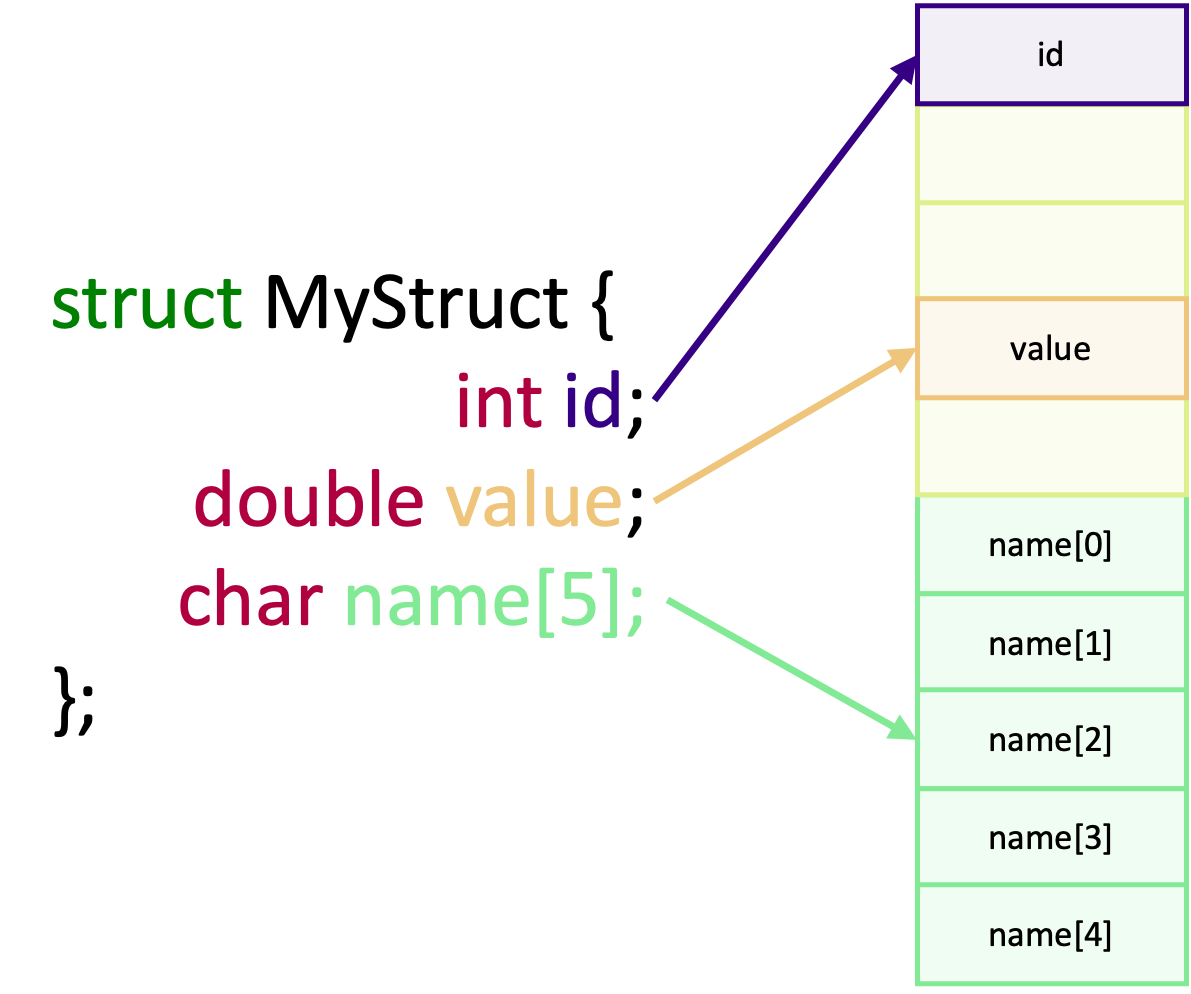

Derived data types, on the other hand, are very similar to C structures which we define by using the basic MPI data types. They’re often useful to group together similar data in communications, or when you need to send a structure from one rank to another. This will be covered in more detail in the Advanced Communication Techniques episode.

What type should you use?

For the following pieces of data, what MPI data types should you use?

1. a[] = {1, 2, 3, 4, 5};

2. a[] = {1.0, -2.5, 3.1456, 4591.223, 1e-10};

3. a[] = "Hello, world!";

Solution

The fact that a[] is an array does not matter, because all of the elements in a[] will be the same data type. In MPI, as we’ll see in the next episode, we can either send a single value or multiple values (in an array).

1. MPI_INT

2. MPI_DOUBLE - MPI_FLOAT would not be correct as floats contain 32 bits of data whereas doubles are 64 bit.

3. MPI_BYTE or MPI_CHAR - you may want to use strlen to calculate how many elements of MPI_CHAR are being sent

Communicators

All communication in MPI is handled by something known as a communicator. We can think of a communicator as being a

collection of ranks which are able to exchange data with one another. What this means is that every communication

between two (or more) ranks is linked to a specific communicator. When we run an MPI application, every rank will belong

to the default communicator known as MPI_COMM_WORLD. We’ve seen this in earlier episodes when, for example, we’ve used

functions like MPI_Comm_rank() to get the rank number,

int my_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &my_rank); // MPI_COMM_WORLD is the communicator the rank belongs to

In addition to MPI_COMM_WORLD, we can make sub-communicators and distribute ranks into them. Messages can only be sent and received within the same communicator, effectively isolating messages to a communicator. For most applications, we usually don’t need anything other than MPI_COMM_WORLD. But organising ranks into communicators can be helpful in some circumstances, as you can create small “work units” of multiple ranks to dynamically schedule the workload, create one communicator for each physical hardware node on an HPC cluster, or help compartmentalise the problem into smaller chunks by using a virtual cartesian topology.

Throughout this course, we will stick to using MPI_COMM_WORLD.

Communication modes

When sending data between ranks, MPI will use one of four communication modes: synchronous, buffered, ready or standard. When a communication function is called, it takes control of program execution until the send-buffer is safe to be re-used again. What this means is that it’s safe to re-use the memory/variable you passed without affecting the data that is still being sent. If MPI didn’t have this concept of safety, then you could quite easily overwrite or destroy any data before it is transferred fully! This would lead to some very strange behaviour which would be hard to debug. The difference between the communication modes is when the buffer becomes safe to re-use. MPI won’t guess at which mode should be used. That is up to the programmer. Therefore each mode has an associated communication function:

| Mode | Blocking function |
|---|---|
| Synchronous | MPI_Ssend() |
| Buffered | MPI_Bsend() |
| Ready | MPI_Rsend() |
| Standard | MPI_Send() |

In contrast to the four modes for sending data, receiving data only has one mode and therefore only a single function.

| Mode | MPI Function |
|---|---|
| Standard | MPI_Recv() |

Synchronous sends

In synchronous communication, control is returned when the receiving rank has received the data and sent back, or “posted”, confirmation that the data has been received. It’s like making a phone call. Data isn’t exchanged until you and the person have both picked up the phone, had your conversation and hung the phone up.

Synchronous communication is typically used when you need to guarantee synchronisation, such as in iterative methods or time dependent simulations where it is vital to ensure consistency. It’s also the easiest communication mode to develop and debug with because of its predictable behaviour.

Buffered sends

In a buffered send, the data is written to an internal buffer before it is sent, and control is returned as soon as the data has been copied. This means MPI_Bsend() returns before the data has been received by the receiving rank, making this an asynchronous type of communication as the sending rank can move onto its next task whilst the data is transmitted. This is just like sending a letter or an e-mail to someone. You write your message, put it in an envelope and drop it off in the postbox. You are blocked from doing other tasks whilst you write and send the letter, but as soon as it’s in the postbox, you carry on with other tasks and don’t wait for the letter to be delivered!

Buffered sends are good for large messages and for improving the performance of your communication patterns by taking advantage of the asynchronous nature of the data transfer.

Ready sends

Ready sends are different to synchronous and buffered sends in that they need a rank to already be listening to receive a message, whereas the other two modes can send their data before a rank is ready. It’s a specialised type of communication used only when you can guarantee that a rank will be ready to receive data. If this is not the case, the outcome is undefined and will likely result in errors being introduced into your program. The main advantage of this mode is that you eliminate the overhead of having to check that the receiving rank is ready for the data, and so it is often used in performance critical situations.

You can imagine a ready send as like talking to someone in the same room, who you think is listening. If they are listening, then the data is transferred. If it turns out they’re absorbed in something else and not listening to you, then you may have to repeat yourself to make sure you transmit the information you wanted to!

Standard sends

The standard send mode is the most commonly used type of send, as it provides a balance between ease of use and performance. Under the hood, the standard send is either a buffered or a synchronous send, depending on the availability of system resources (e.g. the size of the internal buffer) and which mode MPI has determined to be the most efficient.

Which mode should I use?

Each communication mode has its own use cases where it excels. However, it is often easiest, at first, to use the standard send, MPI_Send(), and optimise later. If the standard send doesn’t meet your requirements, or if you need more control over communication, then pick which communication mode suits your requirements best. You’ll probably need to experiment to find the best!

Communication mode summary

| Mode | Description | Analogy | MPI Function |
|---|---|---|---|
| Synchronous | Returns control to the program when the message has been sent and received successfully. | Making a phone call | MPI_Ssend() |
| Buffered | Returns control immediately after copying the message to a buffer, regardless of whether the receive has happened or not. | Sending a letter or e-mail | MPI_Bsend() |
| Ready | Returns control immediately, assuming the matching receive has already been posted. Can lead to errors if the receive is not ready. | Talking to someone you think/hope is listening | MPI_Rsend() |
| Standard | Returns control when it’s safe to reuse the send buffer. May or may not wait for the matching receive (synchronous mode), depending on MPI implementation and message size. | Phone call or letter | MPI_Send() |

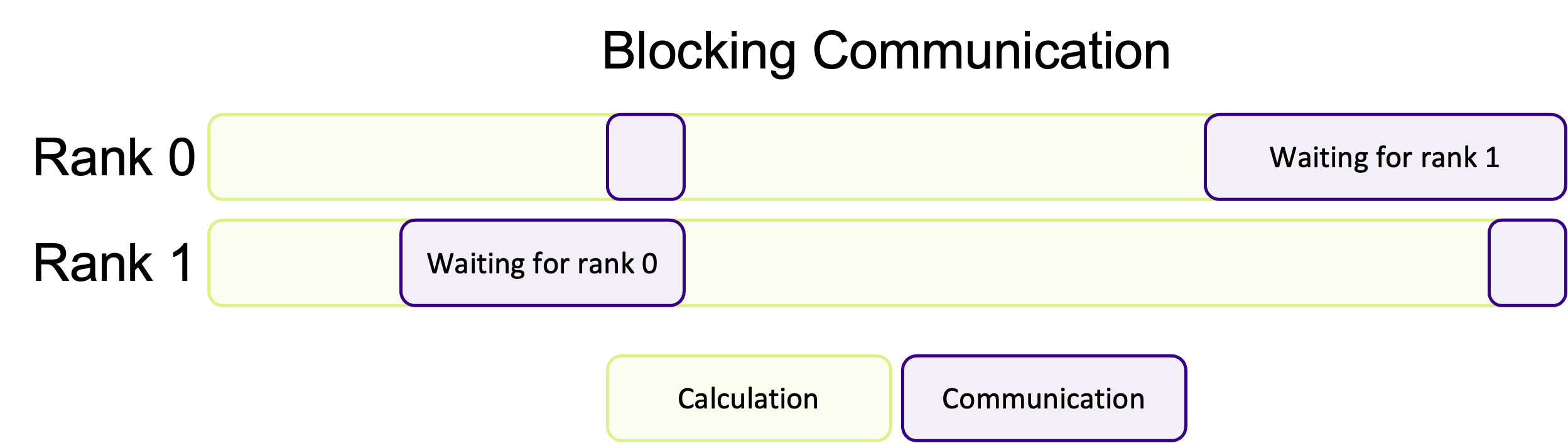

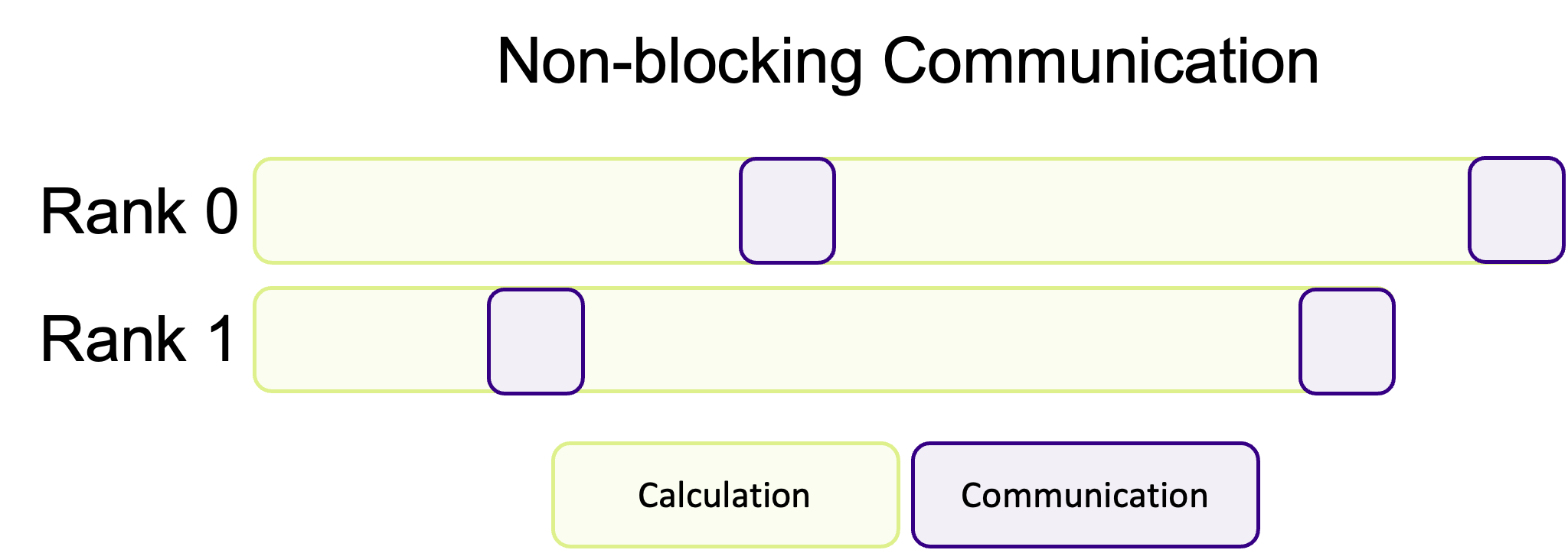

Blocking and non-blocking communication

In addition to the communication modes, communication is done in two ways: either by blocking execution until the communication is complete (like how a synchronous send blocks until a receive acknowledgement is sent back), or, with non-blocking communication, by returning immediately before any part of the communication has finished. Just like with the different communication modes, MPI doesn’t decide if it should use blocking or non-blocking communication calls. That is, again, up to the programmer to decide. As we’ll see in later episodes, there are different functions for blocking and non-blocking communication.

A blocking synchronous send is one where the message has to be sent from rank A, received by B and an acknowledgement sent back to A before the communication is complete and the function returns. In the non-blocking version, the function returns immediately, even before rank A has sent the message or rank B has received it. It is still synchronous, so rank B still has to tell A that it has received the data. But all of this happens in the background, so other work can continue in the foreground while data is transferred. It is then up to the programmer to check periodically if the communication is done, and to not modify or use the data/variable/memory before the communication has completed.

Is MPI_Bsend() non-blocking?

The buffered communication mode is a type of asynchronous communication, because the function returns before the data has been received by another rank. But, it’s not a non-blocking call unless you use the non-blocking version MPI_Ibsend() (more on this later). Even though the data transfer happens in the background, allocating and copying data to the send buffer happens in the foreground, blocking execution of our program. On the other hand, MPI_Ibsend() is “fully” asynchronous because even allocating and copying data to the send buffer happens in the background.

One downside to blocking communication is that if rank B is never listening for messages, rank A will become deadlocked. A deadlock happens when your program hangs indefinitely because the send (or receive) is unable to complete. Deadlocks can occur for countless reasons. For example, we may forget to write the corresponding receive function when sending data. Or a function may return earlier due to an error which isn’t handled properly, or a while condition may never be met, creating an infinite loop. Ranks can also crash silently, making communication with them impossible, but this doesn’t stop any attempts to send data to the crashed rank.

Avoiding communication deadlocks

A common piece of advice in C is that when allocating memory using malloc(), always write the accompanying call to free() to help avoid memory leaks by forgetting to deallocate the memory later. You can apply the same mantra to communication in MPI. When you send data, always write the code to receive the data as you may forget to later and accidentally cause a deadlock.

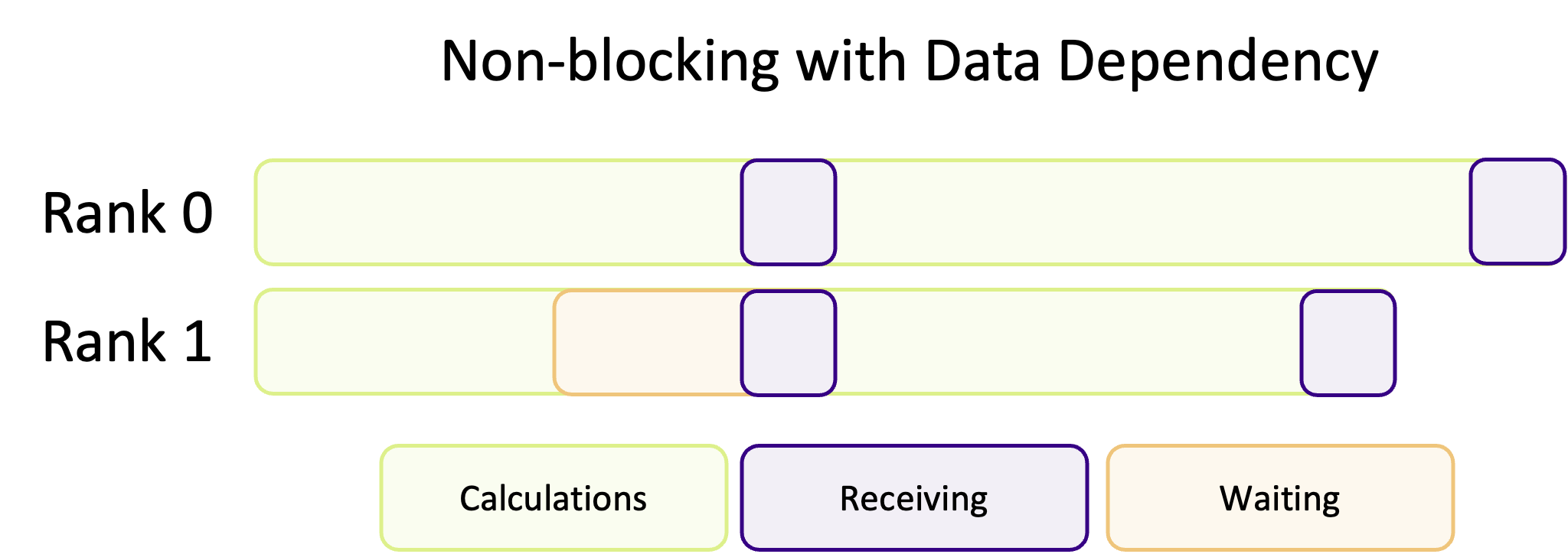

Blocking communication works best when the work is balanced across ranks, so that each rank has an equal amount of things to do. A common pattern in scientific computing is to split a calculation across a grid and then to share the results between all ranks before moving onto the next calculation. If the workload is well balanced, each rank will finish at roughly the same time and be ready to transfer data at the same time. But, as shown in the diagram below, if the workload is unbalanced, some ranks will finish their calculations earlier and begin to send their data to the other ranks before they are ready to receive data. This means some ranks will be sitting around doing nothing whilst they wait for the other ranks to become ready to receive data, wasting computation time.

If most of the ranks are waiting around, or one rank is very heavily loaded in comparison, this could massively impact the performance of your program. Instead of doing calculations, a rank will be waiting for other ranks to complete their work.

Non-blocking communication hands back control, immediately, before the communication has finished. Instead of your program being blocked by communication, ranks will immediately go back to the heavy work and instead periodically check if there is data to receive (which is up to the programmer) instead of waiting around. The advantage of this communication pattern is illustrated in the diagram below, where less time is spent communicating.

This is a common pattern where communication and calculations are interwoven with one another, decreasing the amount of “dead time” where ranks are waiting for other ranks to communicate data. Unfortunately, non-blocking communication is often more difficult to successfully implement and isn’t appropriate for every algorithm. In most cases, blocking communication is easier to implement and to conceptually understand, and is somewhat “safer” in the sense that the program cannot continue if data is missing. However, the potential performance improvements of overlapping communication and calculation are often worth the more difficult implementation and the more complex, harder to read code.

Should I use blocking or non-blocking communication?

When you are first implementing communication in your program, it’s advisable to start with blocking synchronous sends, as this is arguably the easiest pattern to use. Once you are happy that the correct data is being communicated successfully, but you are unhappy with performance, it is time to start experimenting with the different communication modes and with blocking vs. non-blocking patterns, balancing performance against ease of use, code readability and maintainability.

MPI communication in everyday life?

We communicate with people non-stop in everyday life, whether we want to or not! Think of some examples/analogies of blocking and non-blocking communication we use to talk to other people.

Solution

Probably the most common example of blocking communication in everyday life would be having a conversation or a phone call with someone. The conversation can’t happen and data can’t be communicated until the other person responds or picks up the phone. Until the other person responds, we are stuck waiting for the response.

Sending e-mails or letters in the post is a form of non-blocking communication we’re all familiar with. When we send an e-mail, or a letter, we don’t wait around to hear back for a response. We instead go back to our lives and start doing tasks instead. We can periodically check our e-mail for the response, and either keep doing other tasks or continue our previous task once we’ve received a response back from our e-mail.

Key Points

Data is sent between ranks using “messages”

Messages can either block the program or be sent/received asynchronously

Knowing the exact amount of data you are sending is required

Point-to-Point Communication

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How do I send data between processes?

Objectives

Describe what is meant by point-to-point communication

Describe the arguments to MPI_Send() and MPI_Recv()

Use MPI_Send() to send a message to another rank

Use MPI_Recv() to receive a message from another rank

Write, compile and run a complete program that sends a message from one rank to another

In the previous episode we introduced the various types of communication in MPI. In this section we will use the MPI library functions MPI_Send() and MPI_Recv(), which employ point-to-point communication, to send data from one rank to another.

Let’s look at how MPI_Send() and MPI_Recv() are typically used:

- Rank A decides to send data to rank B. It first packs the data to send into a buffer, from which it will be taken.

- Rank A then calls MPI_Send() to create a message for rank B. The underlying MPI communication is then given the responsibility of routing the message to the correct destination.

- Rank B must know that it is about to receive a message and acknowledge this by calling MPI_Recv(). This sets up a buffer for writing the incoming data when it arrives and instructs the communication device to listen for the message.

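As mentioned in the previous episode, MPI_Send() and MPI_Recv() are blocking operations: MPI_Recv() will not return until the message has been received, and MPI_Send() will not return until the data in the send buffer has either been delivered or safely copied elsewhere by MPI.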

Sending a Message: MPI_Send

The MPI_Send() function is defined as follows:

int MPI_Send(

const void *data,

int count,

MPI_Datatype datatype,

int destination,

int tag,

MPI_Comm communicator)

data: Pointer to the start of the data being sent. We would not expect this to change, hence it’s defined as const

count: Number of elements to send

datatype: The type of the element data being sent, e.g. MPI_INT, MPI_CHAR, MPI_FLOAT, MPI_DOUBLE, …

destination: The rank number of the rank the data will be sent to

tag: A message tag (integer), which is used to differentiate types of messages. We can specify 0 if we don’t need different types of messages

communicator: The communicator, e.g. MPI_COMM_WORLD as seen in previous episodes

For example, if we wanted to send a message that contains "Hello, world!\n" from rank 0 to rank 1, we could write (assuming we were rank 0):

char *message = "Hello, world!\n";

MPI_Send(message, 14, MPI_CHAR, 1, 0, MPI_COMM_WORLD);

So we are sending 14 elements of MPI_CHAR, and have specified 0 for our message tag since we don’t anticipate having to send more than one type of message. This call is blocking: it will not return until the send buffer can safely be reused which, depending on the MPI implementation, may mean waiting until the corresponding MPI_Recv() has started to receive the message.

MPI_Ssend: an Alternative to MPI_Send

MPI_Send() represents the “standard mode” of sending messages to other ranks, but some aspects of its behaviour depend on both the MPI implementation being used and the circumstances of its use. There are three scenarios to consider:

- The message is directly passed to the receive buffer, in which case the communication has completed

- The send message is buffered within some internal MPI buffer but hasn’t yet been received

- The function call waits for a corresponding receiving process

In scenarios 1 & 2, the call is able to return immediately, but with 3 it may block until the recipient is ready to receive. It is dependent on the MPI implementation as to what scenario is selected, based on performance, memory, and other considerations.

A very similar alternative to MPI_Send() is MPI_Ssend() (synchronous send), which ensures the communication is both synchronous and blocking. This function guarantees that when it returns, the destination has started receiving the message.

Receiving a Message: MPI_Recv

Conversely, the MPI_Recv() function looks like the following:

int MPI_Recv(

void *data,

int count,

MPI_Datatype datatype,

int source,

int tag,

MPI_Comm communicator,

MPI_Status *status)

data: Pointer to where the received data should be written

count: Maximum number of elements to receive

datatype: The type of the data being received

source: The number of the rank sending the data

tag: A message tag (integer), which must either match the tag in the sent message, or if MPI_ANY_TAG is specified, a message with any tag will be accepted

communicator: The communicator (we have used MPI_COMM_WORLD in earlier examples)

status: A pointer to an MPI_Status structure, which MPI fills in with information about the received message, such as its source, its tag and any error code

Continuing our example, to receive our message we could write:

char message[15];

MPI_Status status;

MPI_Recv(message, 14, MPI_CHAR, 0, 0, MPI_COMM_WORLD, &status);

Here, we create our buffer to receive the data, as well as a variable to hold the status of the receive operation. We then call MPI_Recv(), specifying our receive buffer and the number of elements we will receive (14), which will be of type MPI_CHAR and sent by rank 0, with a message tag of 0. As with MPI_Send(), this call will block, in this case until the message has been received into the buffer, at which point the rank can proceed.

Let’s put this together with what we’ve learned so far.

Here’s an example program that uses MPI_Send() and MPI_Recv() to send the string "Hello, world!" from rank 0 to rank 1:

#include <stdio.h>

#include <mpi.h>

int main(int argc, char **argv) {

int rank, n_ranks;

// First call MPI_Init

MPI_Init(&argc, &argv);

// Check that there are two ranks

MPI_Comm_size(MPI_COMM_WORLD, &n_ranks);

if (n_ranks != 2) {

printf("This example requires exactly two ranks\n");

MPI_Finalize();

return 1;

}

// Get my rank

MPI_Comm_rank(MPI_COMM_WORLD,&rank);

if (rank == 0) {

char *message = "Hello, world!\n";

MPI_Send(message, 14, MPI_CHAR, 1, 0, MPI_COMM_WORLD);

}

if (rank == 1) {

// One extra, zero-initialised byte so the received text is null-terminated

char message[15] = {0};

MPI_Status status;

MPI_Recv(message, 14, MPI_CHAR, 0, 0, MPI_COMM_WORLD, &status);

printf("%s",message);

}

// Call finalize at the end

return MPI_Finalize();

}

MPI Data Types in C

In the above example we send a string of characters and therefore specify the type MPI_CHAR. For a complete list of types, see the MPICH documentation.

Try It Out

Compile and run the above code. Does it behave as you expect?

Solution

mpicc mpi_hello_world.c -o mpi_hello_world

mpirun -n 2 mpi_hello_world

Note above that we specified only 2 ranks, since that’s what the program requires (see the check on n_ranks near the top of the program). You should see:

Hello, world!

What Happens If…

Try modifying, compiling, and re-running the code to see what happens if you…

- Change the tag integer of the sent message. How could you resolve this where the message is received?

- Modify the element count of the received message to be smaller than that of the sent message. How could you resolve this in how the message is sent?

Solution

- The program will hang since it’s waiting for a message with a tag that will never be sent (press Ctrl-C to kill the hanging process). To resolve this, make the tag in MPI_Recv() match the tag you specified in MPI_Send().

- You will likely see a message like the following:

[...:220695] *** An error occurred in MPI_Recv
[...:220695] *** reported by process [2456485889,1]
[...:220695] *** on communicator MPI_COMM_WORLD
[...:220695] *** MPI_ERR_TRUNCATE: message truncated
[...:220695] *** MPI_ERRORS_ARE_FATAL (processes in this communicator will now abort,
[...:220695] *** and potentially your MPI job)

You could resolve this by sending a message of equal size, truncating the message. A related question is whether this fix makes any sense!

Many Ranks

Change the above example so that it works with any number of ranks. Pair even ranks with odd ranks and have each even rank send a message to the corresponding odd rank.

Solution

#include <stdio.h>
#include <mpi.h>

int main(int argc, char **argv) {
    int rank, n_ranks, my_pair;

    // First call MPI_Init
    MPI_Init(&argc, &argv);

    // Get the number of ranks
    MPI_Comm_size(MPI_COMM_WORLD, &n_ranks);

    // Get my rank
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    // Figure out my pair
    if (rank % 2 == 1) {
        my_pair = rank - 1;
    } else {
        my_pair = rank + 1;
    }

    // Run only if my pair exists
    if (my_pair < n_ranks) {
        if (rank % 2 == 0) {
            char *message = "Hello, world!\n";
            MPI_Send(message, 14, MPI_CHAR, my_pair, 0, MPI_COMM_WORLD);
        }

        if (rank % 2 == 1) {
            // One extra, zero-initialised byte keeps the string null-terminated
            char message[15] = {0};
            MPI_Status status;
            MPI_Recv(message, 14, MPI_CHAR, my_pair, 0, MPI_COMM_WORLD, &status);
            printf("%s", message);
        }
    }

    // Call finalize at the end
    return MPI_Finalize();
}

Hello Again, World!

Modify the Hello World code below so that each rank sends its message to rank 0. Have rank 0 print each message.

#include <stdio.h>
#include <mpi.h>

int main(int argc, char **argv) {
    int rank;
    char message[30];

    // First call MPI_Init
    MPI_Init(&argc, &argv);

    // Get my rank
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    // Print a message using snprintf and then printf
    snprintf(message, 30, "Hello World, I'm rank %d", rank);
    printf("%s\n", message);

    // Call finalize at the end
    return MPI_Finalize();
}

Solution

#include <stdio.h>
#include <mpi.h>

int main(int argc, char **argv) {
    int rank, n_ranks;

    // First call MPI_Init
    MPI_Init(&argc, &argv);

    // Get my rank and the number of ranks
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &n_ranks);

    if (rank != 0) {
        // All ranks other than 0 should send a message
        char message[30];
        sprintf(message, "Hello World, I'm rank %d\n", rank);
        MPI_Send(message, 30, MPI_CHAR, 0, 0, MPI_COMM_WORLD);
    } else {
        // Rank 0 will receive each message and print them
        for (int sender = 1; sender < n_ranks; sender++) {
            char message[30];
            MPI_Status status;
            MPI_Recv(message, 30, MPI_CHAR, sender, 0, MPI_COMM_WORLD, &status);
            printf("%s", message);
        }
    }

    // Call finalize at the end
    return MPI_Finalize();
}

Blocking

Try the code below with two ranks and see what happens. How would you change the code to fix the problem?

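Note: Because this example uses the synchronous MPI_Ssend(), it should hang with any MPI implementation. Had it used MPI_Send() instead, it might appear to work with some implementations (for example MPICH), because short messages can be buffered.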

#include <mpi.h>

#define ARRAY_SIZE 3

int main(int argc, char **argv) {
    MPI_Init(&argc, &argv);

    int rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    const int comm_tag = 1;
    int numbers[ARRAY_SIZE] = {1, 2, 3};
    MPI_Status recv_status;

    if (rank == 0) {
        // synchronous send: returns when the destination has started to
        // receive the message
        MPI_Ssend(numbers, ARRAY_SIZE, MPI_INT, 1, comm_tag, MPI_COMM_WORLD);
        MPI_Recv(numbers, ARRAY_SIZE, MPI_INT, 1, comm_tag, MPI_COMM_WORLD, &recv_status);
    } else {
        MPI_Ssend(numbers, ARRAY_SIZE, MPI_INT, 0, comm_tag, MPI_COMM_WORLD);
        MPI_Recv(numbers, ARRAY_SIZE, MPI_INT, 0, comm_tag, MPI_COMM_WORLD, &recv_status);
    }

    return MPI_Finalize();
}

Solution

MPI_Ssend() will block execution until the receiving process has called MPI_Recv(). This prevents the sender from unintentionally modifying the message buffer before the message is actually sent. Above, both ranks call MPI_Ssend() and just wait for the other to respond. The solution is to have one of the ranks receive its message before sending.

Sometimes the standard-mode MPI_Send() will actually make a copy of the buffer and return immediately. This generally happens only for short messages. Even when this happens, the actual transfer will not start before the receive is posted.

For this example, let’s have rank 0 send first, and rank 1 receive first. So all we need to do to fix this is to swap the send and receive for rank 1: